Python is one of the most widely used languages, largely because it is easy to read and write. However, because it is interpreted, it can run into performance bottlenecks, particularly in applications that need a lot of computing power. Data pipelines, web services, and scientific simulations all benefit from optimized Python code: it means faster processing, better resource management, and a smoother user experience.

This post covers actionable ways to speed up Python code, from low-level tweaks to high-level architectural improvements.

What is Optimization?

Python code optimization means changing a program so that it consumes fewer resources, runs faster, or handles increased workloads better. It is not about being clever but about removing inefficiencies. The aim is to balance performance and maintainability so that the code stays readable and debuggable.

Why Is Optimizing Python Code Needed?

Python code can run into several kinds of performance problems, and fixing them sometimes takes extra coding effort. Before looking at techniques, it helps to understand why optimization is worth that effort. The main reasons are:

- Resource Efficiency: Reduce the usage of CPU, memory, and I/O resources.

- Scalability: Handle growing workloads without proportional resource increases.

- User Experience: Faster responses in web apps, real-time analytics, or GUIs.

- Cost Optimization: Reduce spending on cloud resources by optimizing resource usage.

Overlooked Techniques for Optimizing Python Code

Not every technique gets the attention it deserves. Here are a few recommendations that may not be on your radar:

1. Caching & Memoization

Use the lru_cache decorator from the standard library's functools module. It avoids redundant calculations: results are cached, so repeated calls with the same arguments skip the work. This can save significant time in repetitive tasks.

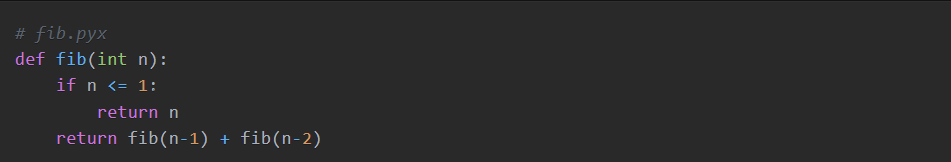
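As a minimal sketch of the idea, the function below (a hypothetical `fib`, chosen only for illustration) is decorated with `lru_cache` so each value is computed once and later calls are served from the cache.

```python
from functools import lru_cache


@lru_cache(maxsize=None)  # unbounded cache; pick a finite maxsize for long-running services
def fib(n: int) -> int:
    """Return the n-th Fibonacci number; repeated sub-calls are served from the cache."""
    if n < 2:
        return n
    return fib(n - 1) + fib(n - 2)


result = fib(30)          # 832040
stats = fib.cache_info()  # named tuple with hits, misses, maxsize, currsize
print(result, stats)
```

Without the decorator, the same call would recompute the smaller values many times over. Note that `lru_cache` requires hashable arguments.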

2. Cython

Cython compiles Python code to C, and compiled code generally executes faster. With Cython you can get closer to the speed of C for selected portions of your code. This is most beneficial for intensive numerical calculations.

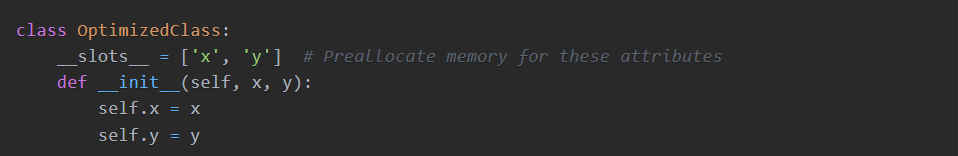

3. Memory Optimization with `__slots__`

Custom classes that define `__slots__` use less memory because they skip the overhead of a per-instance `__dict__`. This trick is helpful when you create a large number of objects.

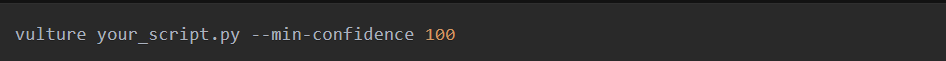
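A small sketch of the difference, using two hypothetical point classes: the slotted version has no per-instance `__dict__`, and it rejects attributes that are not declared.

```python
class PointDict:
    """Regular class: every instance carries its own __dict__."""

    def __init__(self, x, y):
        self.x = x
        self.y = y


class PointSlots:
    """Slotted class: attributes live in fixed slots, no per-instance __dict__."""

    __slots__ = ("x", "y")

    def __init__(self, x, y):
        self.x = x
        self.y = y


p = PointDict(1, 2)
q = PointSlots(1, 2)

has_dict_p = hasattr(p, "__dict__")  # True
has_dict_q = hasattr(q, "__dict__")  # False
print(has_dict_p, has_dict_q)
```

The trade-off is flexibility: assigning an undeclared attribute such as `q.z = 3` raises `AttributeError`.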

4. Avoid Unnecessary Imports

Every import adds some overhead when your program starts. Import only what you need; this reduces memory use and speeds up startup. Review which libraries are actually necessary. Tools like Vulture can identify dead code, including unused imports.

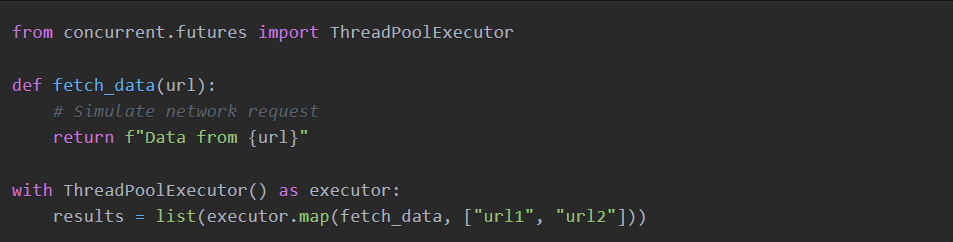

5. Concurrency & Parallelism

Python has built-in support for concurrency through modules such as concurrent.futures and asyncio. Use these mainly for I/O-bound tasks. They let many operations overlap, which reduces waiting time and improves performance. For CPU-bound work, process-based parallelism is usually the better fit.

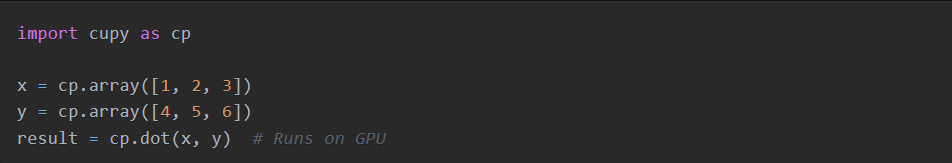
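The sketch below shows one way to overlap I/O-bound work with a thread pool. The `fake_download` function is a stand-in that only sleeps; in real code it would be a network or disk call.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_download(item_id: int) -> str:
    """Hypothetical I/O-bound task; the sleep stands in for network latency."""
    time.sleep(0.1)
    return f"item-{item_id}"


start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as pool:
    # map() preserves input order even though the calls run concurrently
    results = list(pool.map(fake_download, range(5)))
elapsed = time.perf_counter() - start

print(results)
print(f"elapsed: {elapsed:.2f}s")
```

Run sequentially, the five calls would take about half a second in total; with five worker threads the waits overlap, so the elapsed time should be much closer to a single call.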

6. GPU Acceleration with CuPy

For numerical computing, CuPy lets you run array code on GPUs. Processing data on a GPU can be significantly quicker than on a CPU, which is especially helpful for heavy calculations. CuPy mirrors much of the NumPy API, so you can often replace NumPy with CuPy for GPU-accelerated operations.

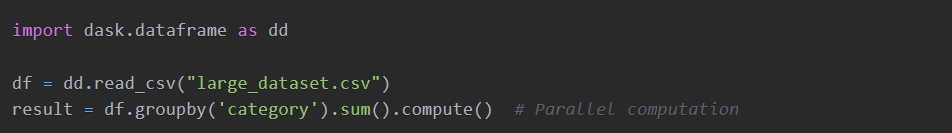

7. Dask for Big Data

Dask is a library for working with large datasets. It supports parallel computation and lazy evaluation: Dask breaks the work into smaller pieces and executes them efficiently without consuming excessive memory.

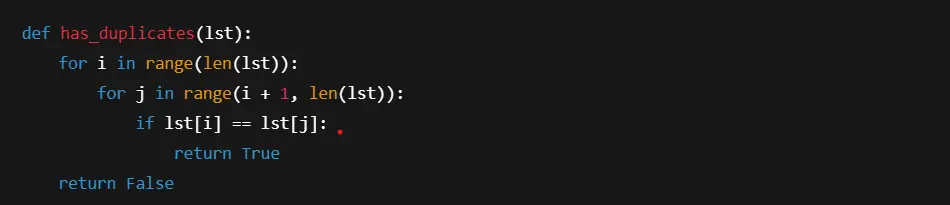

8. Algorithmic Improvements

Choosing a better algorithm is often the most effective optimization. When alternatives exist, prefer an O(n log n) or O(n) algorithm over an O(n²) one. Look at the available data structures and sorting routines before writing your own. A better algorithm usually outweighs any amount of low-level tuning.

Bad (O(n²)):

Better (O(n)):

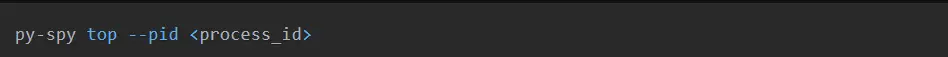
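As one illustration of the O(n²) versus O(n) contrast, here is a sketch of duplicate detection written both ways. The function names are hypothetical, and the linear version assumes the items are hashable.

```python
def has_duplicates_quadratic(items):
    """O(n^2): compare every pair of elements."""
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    """O(n) on average: remember what has been seen in a set."""
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


data = [3, 1, 4, 1, 5]
print(has_duplicates_quadratic(data), has_duplicates_linear(data))
```

Both functions return the same answer; the difference only shows up in running time as the input grows.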

9. Advanced Profiling Tools

Tools such as Py-Spy let you profile running Python programs. They require no code modifications and sample the program in real time, which helps you identify bottlenecks with little disruption.

10. Advanced Data Structures

Sometimes changing the data structure is the most effective fix. Use collections.deque for queues, and use bisect to search or insert into an already sorted list efficiently. The right data structure speeds up processing and keeps the code simple.
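A brief sketch of both tools, with made-up sample data: `deque` gives constant-time pops from the left end, and `bisect` keeps a list sorted without re-sorting it after each insert.

```python
import bisect
from collections import deque

# Queue: popleft() is O(1), while list.pop(0) is O(n)
queue = deque(["a", "b", "c"])
queue.append("d")
first = queue.popleft()  # "a"

# Sorted list: insort() finds the position by binary search
scores = [10, 20, 40, 50]
bisect.insort(scores, 30)             # scores is now [10, 20, 30, 40, 50]
idx = bisect.bisect_left(scores, 40)  # 3, the index where 40 sits

print(first, list(queue), scores, idx)
```

Note that `bisect` does not sort anything itself; it assumes the list is already in order.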

Performance Recommendations for Optimizing Python Code

Once you understand the techniques, here are recommendations to keep your Python code efficient.

1. Profile First: Identify Bottlenecks

Before modifying your code, find out where the time actually goes. Use tools like cProfile, Py-Spy, or memory profilers to do this. Profiling lets you target the specific areas that need improvement.

Example: Using cProfile

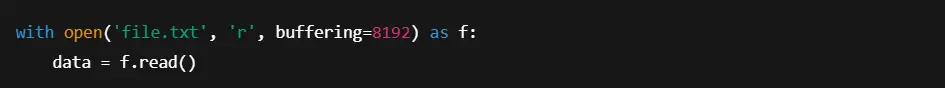
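A minimal sketch of profiling with cProfile and pstats from the standard library. The `slow_sum` function is a hypothetical workload used only to give the profiler something to measure.

```python
import cProfile
import io
import pstats


def slow_sum(n):
    """Hypothetical workload: sum of squares in a plain loop."""
    total = 0
    for i in range(n):
        total += i * i
    return total


profiler = cProfile.Profile()
profiler.enable()
value = slow_sum(100_000)
profiler.disable()

# Collect the report in a string, sorted by cumulative time
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(5)
report = buffer.getvalue()
print(report)
```

For a whole script, running `python -m cProfile -s cumulative script.py` from the command line gives a similar report without touching the code.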

2. Optimize I/O Operations

I/O operations often slow a program down because the code spends most of its time waiting. Use asynchronous I/O with asyncio where waits can overlap, and use buffering for file operations. Both reduce the time your code spends waiting on the disk or network.

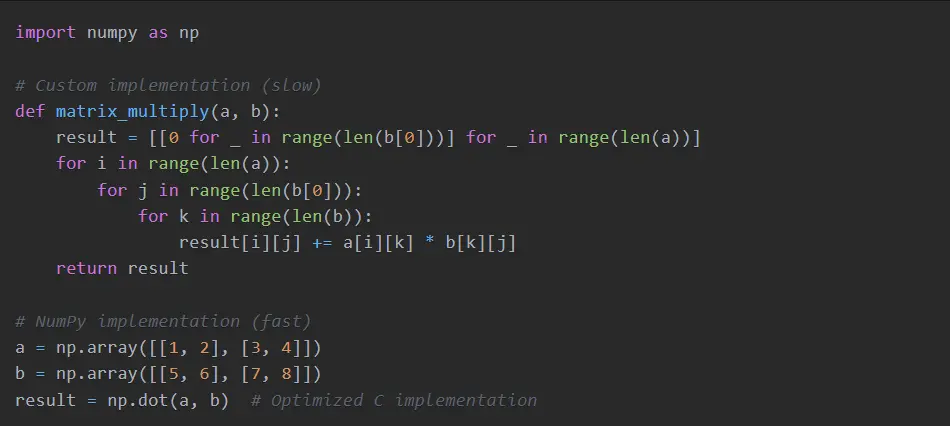
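The following sketch shows overlapping waits with asyncio. The `fetch` coroutine is hypothetical and uses `asyncio.sleep` as a stand-in for a real network or disk wait.

```python
import asyncio


async def fetch(name: str, delay: float) -> str:
    """Hypothetical I/O task; the sleep stands in for waiting on a resource."""
    await asyncio.sleep(delay)
    return f"{name} done"


async def main() -> list:
    # gather() runs the coroutines concurrently and returns results in call order
    return await asyncio.gather(
        fetch("a", 0.1),
        fetch("b", 0.1),
        fetch("c", 0.1),
    )


results = asyncio.run(main())
print(results)
```

The three waits overlap, so the total time is roughly that of one wait rather than three.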

3. Leverage Specialized Libraries

Specialized libraries such as NumPy, Pandas, and TensorFlow are heavily optimized, with their performance-critical parts implemented in compiled code. Use them whenever possible instead of writing custom code.

Example: Matrix Multiplication with NumPy

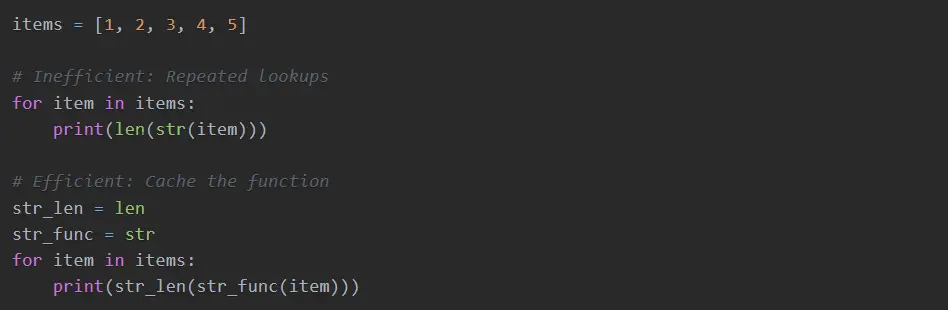

4. Minimize Function Calls in Loops

In tight loops, function calls and attribute lookups add noticeable overhead. Store repeatedly used functions or methods in a local variable before the loop, so that the lookup happens once instead of on every iteration.

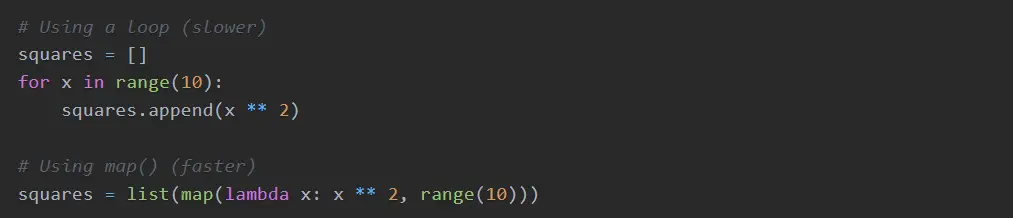
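One way this can look in practice, with hypothetical function names: the second version binds `math.sqrt` and `result.append` to local names once, outside the loop.

```python
import math


def roots_with_lookups(values):
    """Looks up math.sqrt and result.append on every iteration."""
    result = []
    for v in values:
        result.append(math.sqrt(v))
    return result


def roots_with_locals(values):
    """Binds the function and method to local names once, before the loop."""
    sqrt = math.sqrt
    result = []
    append = result.append
    for v in values:
        append(sqrt(v))
    return result


sample = [1.0, 4.0, 9.0]
print(roots_with_lookups(sample), roots_with_locals(sample))
```

The gain is usually small and depends on the Python version, so it is worth measuring with timeit before applying this widely.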

5. Use Built-in Functions

In CPython, the built-in functions are implemented in C, which makes them fast. Prefer them in your own code. Built-ins such as map() and filter(), as well as list comprehensions, often outperform equivalent hand-written loops.

Example: Using map() vs. a Loop

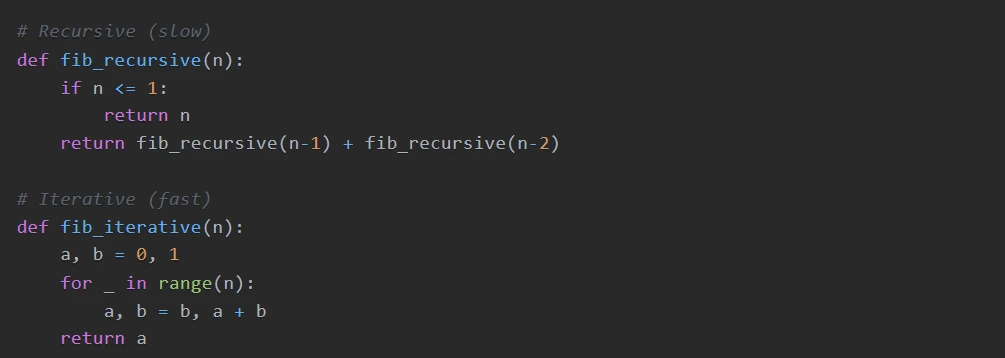
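A small sketch comparing the three styles on made-up data. All three produce the same list; the last two push the loop into the interpreter's optimized machinery.

```python
words = ["alpha", "beta", "gamma"]

# Explicit loop
lengths_loop = []
for w in words:
    lengths_loop.append(len(w))

# map() with a built-in function
lengths_map = list(map(len, words))

# List comprehension
lengths_comp = [len(w) for w in words]

print(lengths_loop, lengths_map, lengths_comp)
```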

6. Avoid Recursion

Deep recursion consumes a lot of stack space, and Python limits the recursion depth. Where possible, use iteration, which avoids the overhead of recursive calls.

Example: Fibonacci Sequence
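A sketch of how the Fibonacci case might look, with hypothetical function names: the recursive version makes an exponential number of calls, while the iterative version uses a simple loop and constant stack space.

```python
def fib_recursive(n):
    """Naive recursion: exponential number of calls, deep call stack."""
    if n < 2:
        return n
    return fib_recursive(n - 1) + fib_recursive(n - 2)


def fib_iterative(n):
    """Iteration: n loop steps, no recursive calls."""
    a, b = 0, 1
    for _ in range(n):
        a, b = b, a + b
    return a


print(fib_recursive(10), fib_iterative(10))  # 55 55
```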

7. Efficient String Handling

Building a string with the “+” operator inside a loop is slow, because each concatenation can create a new string object. Use the .join() method instead, which assembles the result in a single pass.

Bad:

Better:

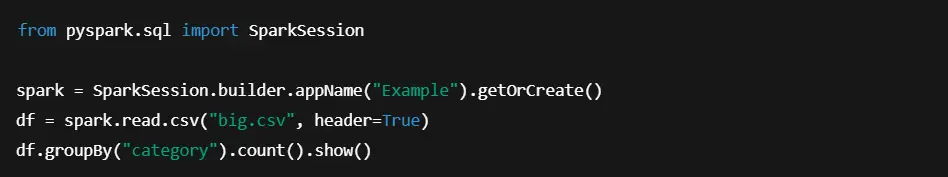
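A minimal sketch of the two approaches on made-up data. Both build the same string; the second does it with one call.

```python
parts = [str(i) for i in range(5)]

# Repeated concatenation: may copy the growing string on each step
slow = ""
for p in parts:
    slow += p

# join(): builds the final string in one pass
fast = "".join(parts)

print(slow, fast)  # 01234 01234
```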

8. Hardware Acceleration

For large workloads, consider GPUs or distributed frameworks such as Apache Spark. Spreading the work across multiple hardware components shortens execution time for large computational tasks.

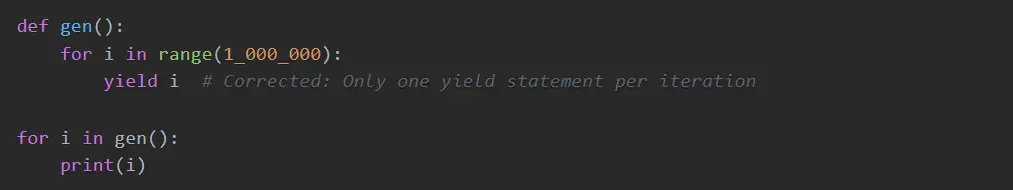

9. Lazy Evaluation

Generators and the Dask library process data on demand. Lazy evaluation saves memory because large datasets never have to be loaded into memory all at once.
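A short sketch of lazy evaluation with a generator. The `squares` function is hypothetical; it yields one value at a time instead of building a list.

```python
import itertools


def squares(limit):
    """Yield squares one at a time; nothing is stored between steps."""
    for i in range(limit):
        yield i * i


# Consumes a million values without ever holding them all in memory
total = sum(squares(1_000_000))

# Only the first three values are computed, even though the limit is huge
first_three = list(itertools.islice(squares(10**12), 3))  # [0, 1, 4]

print(total, first_three)
```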

10. Continuous Monitoring

To keep Python applications performing well, monitor their behavior continuously. Tools such as New Relic, Datadog, or Prometheus provide insight into application health and performance, so issues can be resolved before they affect users and resources stay efficiently used. Continuous monitoring is a cornerstone of modern software development, whether for web apps, data pipelines, or scientific simulations.

Example: New Relic Integration

These recommendations will help your Python applications run efficiently, scale well, and meet user expectations.

Conclusion

Performance matters, especially when a large codebase is running at scale. You do not have to rewrite it in a different language; Python can be made fast and efficient with the right tools and techniques.

Start with profiling. Fix the slow parts. Use built-in features, smart libraries, and improved algorithms. If required, bring in Cython, GPU power, or Dask. As always, keep the code clean and simple.

Optimizing Python code for performance isn’t over-engineering; it’s about making wise choices that keep your programs fast and reliable.
